- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

on 10-12-2021 06:50 AM

The promise of Postgres JSON

JSON support is the most interesting new Postgres feature of the last few years. It relaxes the primary constraint of SQL databases — the rigid schema structure — by letting you store semi-structured data in your tables alongside other data. It also decisively counters the NoSQL trend by giving users document store-like semantics in a proven, mature database technology.

But while the getters and setters are straightforward, the functions for analysis queries are a bit counterintuitive. In this post, we’ll cover a few common patterns and how to build them out.

Joining on array values

Often, when adding many-to-one data to a table, you’ll start with a simple array. Yet inevitably an unanticipated analysis need will come up, and you’ll need to write joins using the data in the arrays.

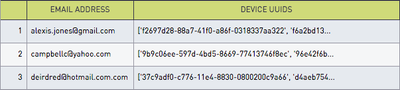

For example, if you stored each user’s device UUIDs in a JSON array, but now you want to join it to our newly-created devices table. The users table might look like this:

Start by breaking the table out into one row per users.id per device_uuid. Do that with the handy json_array_elements function:

select id, json_array_elements(device_uuids) device_uuid

from users

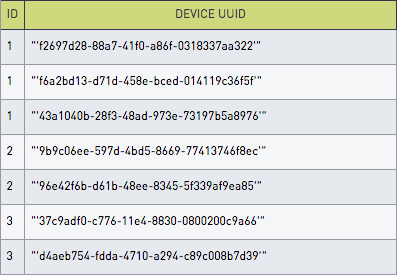

json_array_elements is a set-defining function, meaning it returns more than one row for each row it’s called on. In this case you’re going to get one row per user per device_uuid. Here are the results:

Now there is a device_uuid column. You can finally join users to devices. For example, if you wanted number of users per device platform, do this:

select devices.platform, count(distinct users.id)

from devices join (

select user_id, json_array_elements(device_uuids) device_uuid

from users

) users_with_device_uuids

using (device_uuid)

group by 1

Just subselect your previous query and write a quick group-and-count.

Aggregating over arrays

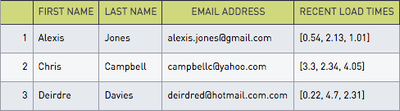

Similarly, you might quickly store individual values in an array, but then need to aggregate those values later. For example, store per-user website load times in an array in the users table like so:

By storing them this way, you’ve made getting per-user average load times more annoying than if you had used a separate pageload_logs table. But, as before, you can create that table on the fly with the json_array_elements function:

select user_id, json_array_elements(load_times) load_time

from users

Now just cast to float and compute a per-user average:

select id, avg(load_time::text::numeric) from (

select id, json_array_elements(load_times) load_time

from users

) t

group by 1

Note the bizarre double-typecast: ::text::numeric. JSON functions will return integers or text but never floating-point values. Fortunately you can cast to text and then to numeric.

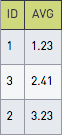

This query gives the results you were looking for:

Finally, you can compute a global average the same way, just without the grouping:

select avg(load_time::text::numeric) from (

select user_id, json_array_elements(load_times) load_time

from users

) t

And now you have the single average load time:

Aggregating object values

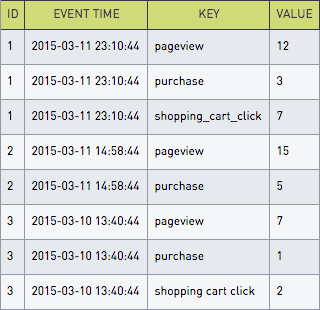

If you’re logging events from mobile devices and other clients, you may be using a JSON column for event data, with a key for each event type and a value for the count. For example, each timestamp might have an object like this:

{

pageview: 12,

shopping_cart_click: 7,

purchase: 3

}

Now sum the total number of events you got on each day. Use the set-defining function json_each_text to split out the keys and values. Start by just selecting the raw keys and values:

select * from events, json_each_text(events.event_data)

Take a good look at that join:

from events, json_each_text(events.event_data)

This is a lateral cartesian join. It’s short for:

from events cross join lateral json_each_text(events.event_data)

The second half of the join references the table in the first half of the join, restricting the keys and values to the right event.

This gives the results you expect:

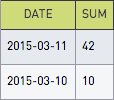

Given that you have the keys and values right there in the join, it is possible to rewrite the query with a simple group-and-count:

select date(event_time), sum(value::float)

from events, json_each_text(events.event_data)

group by 1

Note the cast of value to float. Choose json_each_text instead of json_each because it returns text, which can be cast directly to float.

Now you’ve got the total events per day.

Building blocks

Favorite trick: split the JSON data out into rows and then aggregate. The best functions for doing this are json_array_elements for arrays and json_each_text for objects. Paired with a little creative join logic, you’ll be analyzing JSON data in no time.

Recommended Quick Links

- Community FAQs

- Community Welcome & Guidelines

- Discussion Posting Tips

- Partner Guidelines

- Profile Settings

- Ranks & Badges

Developers:

Product Feedback Forum:

Need additional support?:

The Legal Stuff

Have a question about the Sisense Community?

Email [email protected]